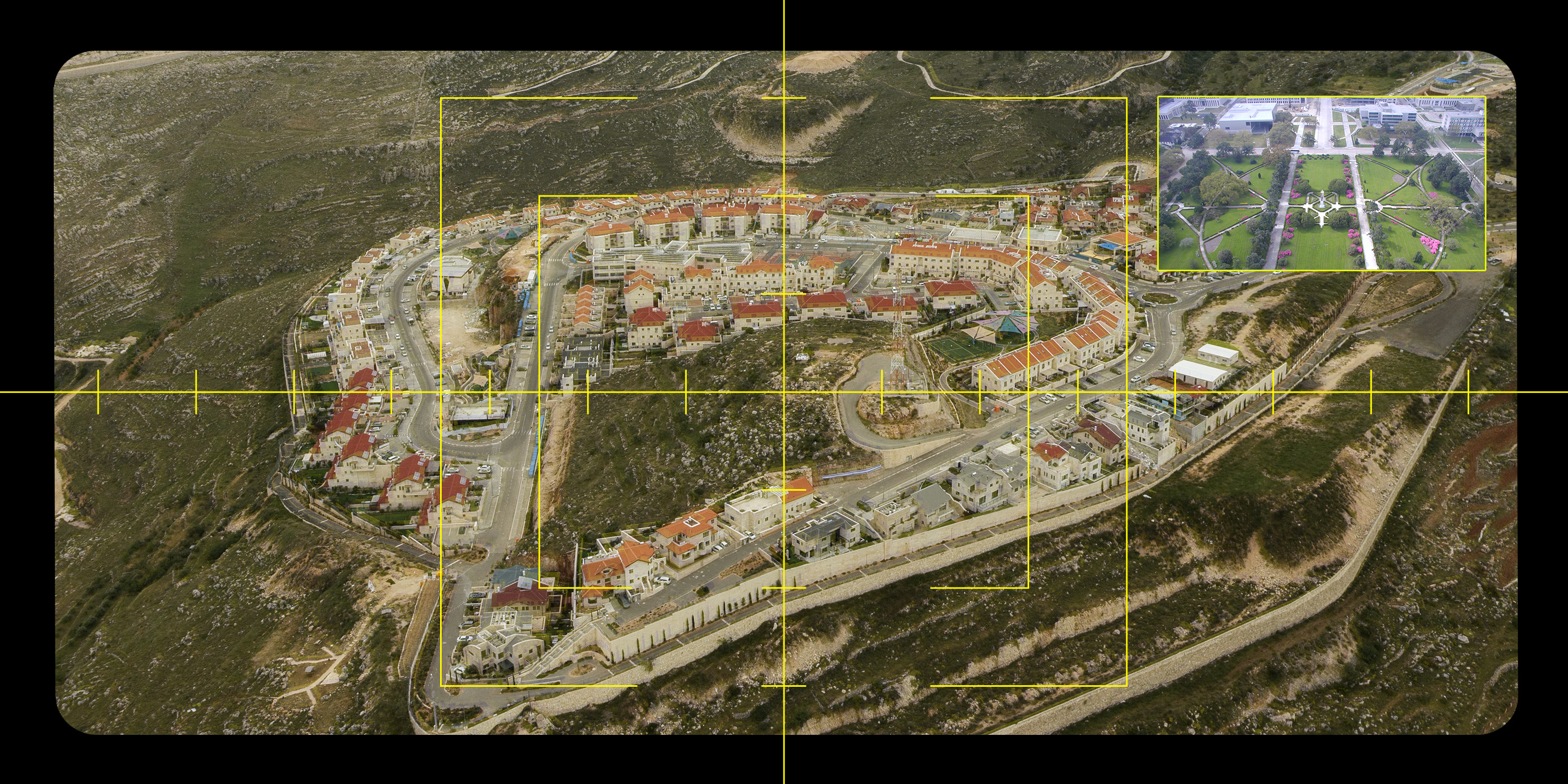

Worried about a potential war with China, the Pentagon is turning to a new class of weapons to fight the numerically superior People’s Liberation Army: drones, lots and lots of drones.

In August 2023, the Defense Department unveiled Replicator, its initiative to field thousands of “all-domain, attritable autonomous (ADA2) systems”: Pentagon-speak for low-cost (and potentially AI-driven) machines that the military can use and lose en masse to overwhelm Chinese forces. These machines take the form of self-piloting ships, large robot aircraft, and swarms of smaller kamikaze drones.

Earlier this month, two Pentagon offices leading this charge announced that four nontraditional weapons makers had been chosen for another drone program, with test flights planned for later this year. The companies building this “Enterprise Test Vehicle,” or ETV, will have to prove that their drone can fly over 500 miles and deliver a “kinetic payload,” with a focus on weapons that are low-cost, quick to build, and modular, according to a 2023 solicitation for proposals and a recent announcement from the Air Force Armament Directorate and the Defense Innovation Unit, the Pentagon’s off-the-shelf acceleration arm. Many analysts believe that the ETV initiative may be connected to the Replicator program. DIU did not return a request for clarification prior to publication.

The new robot planes will mark a shift from the Defense Department’s “legacy” drones, which DIU says are “over-engineered” and “labor-intensive” to produce. The four contractors chosen for the program are Anduril Industries, Integrated Solutions for Systems, Leidos Dynetics, and Zone 5 Technologies, which were selected from a field of more than 100 applicants.

The goal is to choose one or more variants of what look to be suicide drones (weapon-makers prefer “loitering munitions”) that can be mass produced through “on-call” manufacturing and churned out in quantity as needed. (DIU did not offer clarification on whether all prototypes are expected to be strictly kamikaze aircraft.) These drones will likely be smaller than the MQ-1 Predator and MQ-9 Reaper drones — which were used extensively as ground-launched, reusable, missile-firing assassination weapons during the first decades of the war on terror — and more versatile, since the new ETVs must include an air-delivered variant that can be dropped or launched from cargo aircraft.

For the last 25 years, uncrewed Predators and Reapers, piloted by military personnel on the ground, have been killing civilians across the planet, from Afghanistan and Libya to Syria and Yemen.

To highlight just one instance, a 2018 U.S. drone strike in Somalia killed at least three, and possibly five, civilians — including 22-year-old Luul Dahir Mohamed and her 4-year-old daughter Mariam Shilow Muse — as revealed by a 2023 investigation by The Intercept, prompting two dozen human rights organizations and five members of Congress to call for the Pentagon to compensate Luul and Mariam’s family for the deaths.

Experts worry that mass production of new low-cost, deadly drones will lead to even more civilian casualties. “The clear danger is that these drones will be used at a greater scale, raising questions about the possibility of civilian harm,” Priyanka Motaparthy, the director of the Project on Counterterrorism, Armed Conflict and Human Rights at Columbia Law School’s Human Rights Institute, told The Intercept. “We need to know if these drones might be used in situations that put civilians at risk. We need to know how risks will be assessed.”

While U.S. drones have relied on human operators to conduct lethal strikes — many times with disastrous results — advances in artificial intelligence have increasingly raised the possibility of robot planes, in various nations’ arsenals, selecting their own targets.

Electronic jamming by Russia in the Ukraine war has spurred a shift to autonomous drones that lock on a target and continue their mission even when communications with a human operator have been severed. Last year, the Ukrainian drone company Saker claimed its fully autonomous Saker Scout was using AI to identify and attack 64 different types of Russian “military objects.”

Ukraine has employed as many as 10,000 low-cost drones per month to counter the Russian military’s advantage in forces. Pentagon officials see Ukraine’s drone force as a model for countering the larger military of the People’s Republic of China. “Replicator is meant to help us overcome the PRC’s biggest advantage, which is mass,” said Deputy Secretary of Defense Kathleen Hicks, one of the officials overseeing that program.

Last month, the Pentagon announced it would “accelerate fielding of the Switchblade-600 loitering munition,” a kamikaze anti-armor drone from contractor AeroVironment that flies overhead until it finds a target and that has been used extensively in Ukraine. “This is a critical step in delivering the capabilities we need, at the scale and speed we need,” said Adm. Samuel Paparo, commander of Indo-Pacific Command, or INDOPACOM.

At a recent NATO conference, Alex Bornyakov, Ukraine’s deputy minister of digital transformation, discussed the potential for using AI and a network of acoustic sensors to target a Russian “war criminal” for assassination by autonomous drone. “Computer vision works,” he said. “It’s already proven.”

The use of autonomous weapons has been subject to debate for over a decade. Since 2013, the Stop Killer Robots campaign, which has grown to a coalition of more than 250 nongovernmental organizations including Amnesty International and Human Rights Watch, has called for a legally binding treaty banning autonomous weapons.

Pentagon regulations released last year state that fully- and semi-autonomous weapons systems must be used “in accordance with the law of war” and “DoD AI Ethical Principles.” The latter, released in 2020, only stipulate, however, that personnel will exercise “appropriate” levels of “judgment and care” when it comes to developing and deploying AI.

But “care” has never been an American hallmark. For the last century, the U.S. military has conducted airstrikes demonstrating a consistent disregard for civilians: casting or misidentifying ordinary people as enemies; failing to investigate civilian harm allegations; excusing casualties as regrettable but unavoidable; and failing to prevent their recurrence or to hold troops accountable.

During the first 20 years of the war on terror, the U.S. conducted more than 91,000 airstrikes across seven major conflict zones — Afghanistan, Iraq, Libya, Pakistan, Somalia, Syria, and Yemen — and killed up to 48,308 civilians, according to a 2021 analysis by Airwars, a U.K.-based airstrike monitoring group.

The Defense Department repeatedly misses its deadline for reporting the number of civilians that U.S. operations kill each year, even though that report is the lowest bar for accountability for its actions. Its 2022 report was issued this April, a year late. The Pentagon also blew its congressionally mandated May 1 deadline for the 2023 report. Last month, The Intercept asked Lisa Lawrence, the Pentagon spokesperson who handles civilian harm issues, why the 2023 report was late and when to expect it. A return receipt indicates that she read the email, but she failed to offer an answer.

At least one of the new drone prototypes will go into full production for the military, based on how Special Operations Command, INDOPACOM, and others evaluate their performance. The winner, or winners, of the competition will be chosen to “continue development toward a production variant capable of rapidly scalable manufacture,” according to DIU.

A drone scale-up in the absence of accountability worries Columbia Law’s Motaparthy. “The Pentagon has yet to come up with a reliable way to account for past civilian harm caused by U.S. military operations,” she said. “So the question becomes, ‘With the potential rapid increase in the use of drones, what safeguards potentially fall by the wayside? How can they possibly hope to reckon with future civilian harm when the scale becomes so much larger?’”
